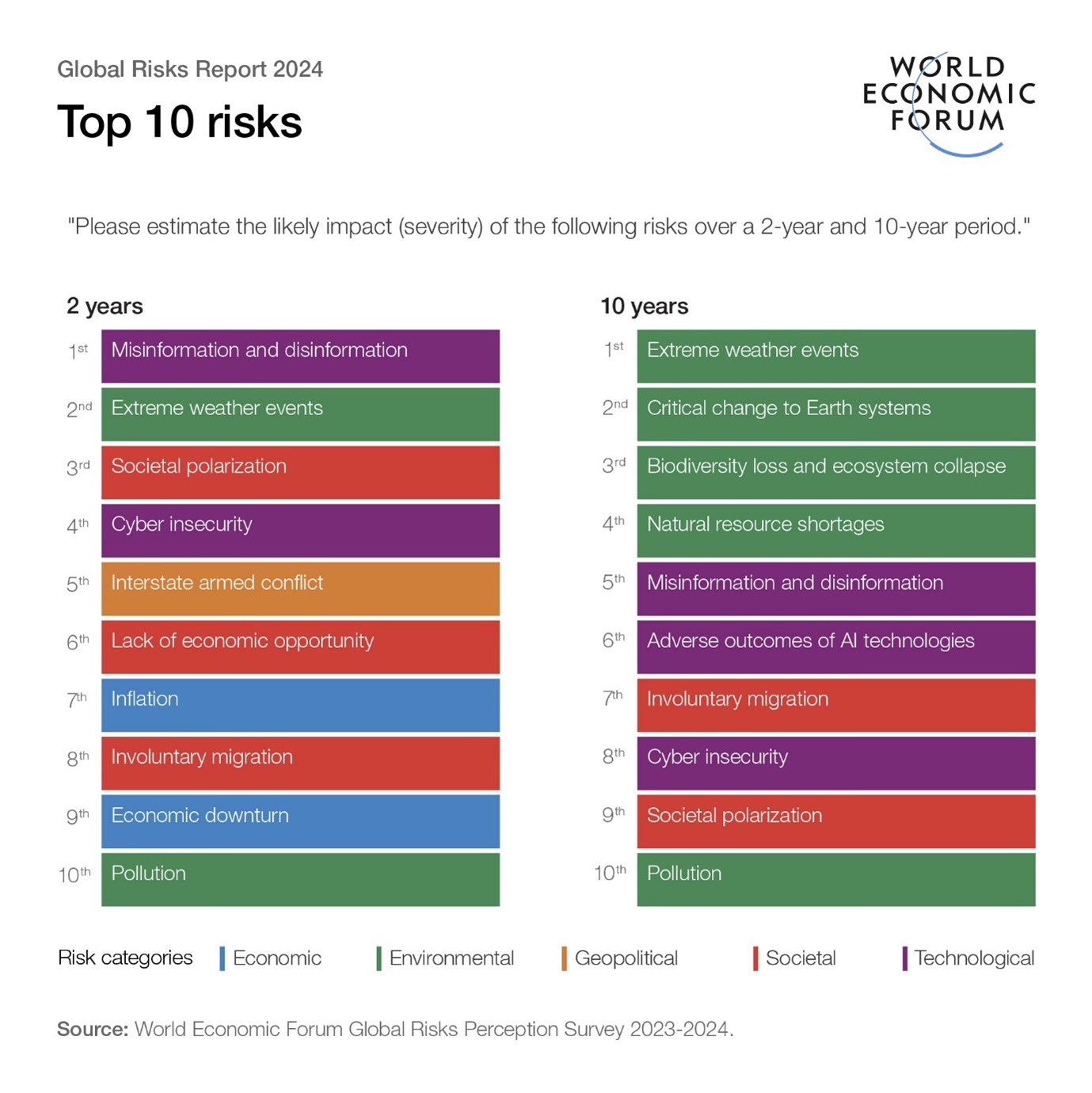

According to the World Economic Forum’s Global Risks Report 2024, misinformation and disinformation have emerged as the most severe global risk over the next two years, and the fifth most severe over the next ten years.

People aged 30–39 are the least concerned age group, yet even they rank it among their top three risks. Across all age groups, it is second only to extreme weather as the top concern for 2024. There are also growing fears that the widespread use of misinformation and disinformation may undermine the legitimacy of newly elected governments and officials.

The report also suggests that the spread of mis- and disinformation could lead to government censorship, propaganda, and controls on the flow of information.

As billions of people head to the polls this year, how can they be sure they are making an informed choice that isn’t influenced by mis- and disinformation?

Although disinformation is often blamed for swaying elections, the research tells a different story.

The rule of five

If you’re trying to determine whether something you’ve read is fact or fiction, it’s worth not only double-checking but triple-checking. The more reputable sources that are sharing a story, the more likely it is to be true.

We like to use the rule of five to make sure you are consulting multiple sources and challenging your pre-existing biases. Impartiality matters, and confirmation bias is a very real risk.

Unless we actively seek out alternative sources of information, we are likely to see more of the information that aligns with what we already believe, and therefore deepen our confirmation bias.

An easy way to combat this is to find five sources representing five different perspectives, which helps you broaden your view and think critically.

Realistically, you cannot do this for everything you read or everything you’re told, but you should practice this skill before sharing information, especially on social media.

The more you practice it, the more natural the skill becomes and the easier it gets to spot bias.